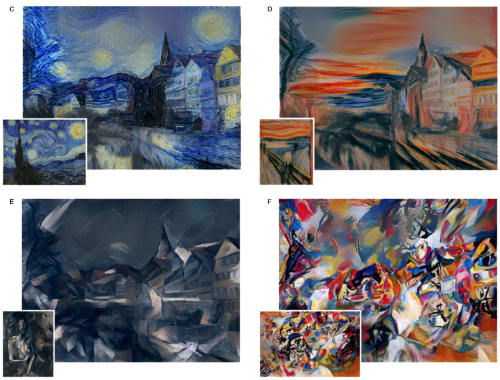

However, mastery is not immune to automation. Portraiture as a profession melted away with the invention of the camera, which in turn became commoditized and eventually digitized. The value-add from painting had to shift to things the camera did *not* do. As a result, many artists moved from chasing realism toward capturing emotion (e.g., Impressionism), toward the fantastical (e.g., Surrealism), or, in the 20th century, toward non-representational abstraction (e.g., Abstract Expressionism). The replacement technology itself, the camera, also became an artistic medium: take, for example, the emotional range of fashion or celebrity photography (e.g., Madonna as the Mona Lisa). The skill of using the camera to make art, rather than mere illustration, became a rare craft as well; see the great work of Annie Leibovitz.

Facebook is building towards a Metaverse version of the Internet, in both its hardware and software efforts. What are the implications? And further, how does one acquire status, work, and social capital in such a world? We explore the recent NFT avatar projects through the lens of Ivy League universities and CFA exams to understand some timeless cultural trends.

Instead, we are going to tap again into a new development in art and neural networks as a metaphor for where AI progress sits today, and for what may be feasible in the years to come. For our 2019 “initiation” on this topic, covering the foundational concepts, see here. Today, let’s talk about OpenAI’s CLIP model, which connects natural language inputs with image search and navigation, and about generative neural art models like VQ-GAN.

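Compared to GPT-3, which is really good at generating language, CLIP is really good at associating language with images. Rather than being trained on one labeled image dataset with a fixed list of categories, it learns from image-caption pairs to place pictures and text in a shared space, so it can match an image to text descriptions of categories it was never explicitly trained to recognize.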
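To make the “shared space” idea concrete, here is a minimal sketch of CLIP-style zero-shot classification, assuming we already have embeddings from an image encoder and a text encoder. The three-dimensional vectors below are hypothetical stand-ins (real CLIP embeddings have hundreds of dimensions and come from trained networks), and `zero_shot_classify` is an illustrative helper, not part of any OpenAI API. Each caption is scored by cosine similarity to the image, and the best match wins.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_classify(image_embedding, caption_embeddings):
    """Hypothetical helper: return the caption closest to the image, plus all scores."""
    scores = {
        caption: cosine_similarity(image_embedding, emb)
        for caption, emb in caption_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Made-up vectors standing in for text-encoder outputs, one per candidate caption.
caption_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a car": [0.1, 0.9, 0.1],
    "a photo of a jellyfish": [0.0, 0.2, 0.95],
}
# Made-up vector standing in for the image-encoder output.
image_embedding = [0.8, 0.2, 0.1]

label, scores = zero_shot_classify(image_embedding, caption_embeddings)
print(label)  # prints "a photo of a dog"
```

Because the categories are just text, a new category can be tried by writing a new caption, with no retraining; that is roughly what it means for CLIP to work through language rather than a fixed labeled dataset.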

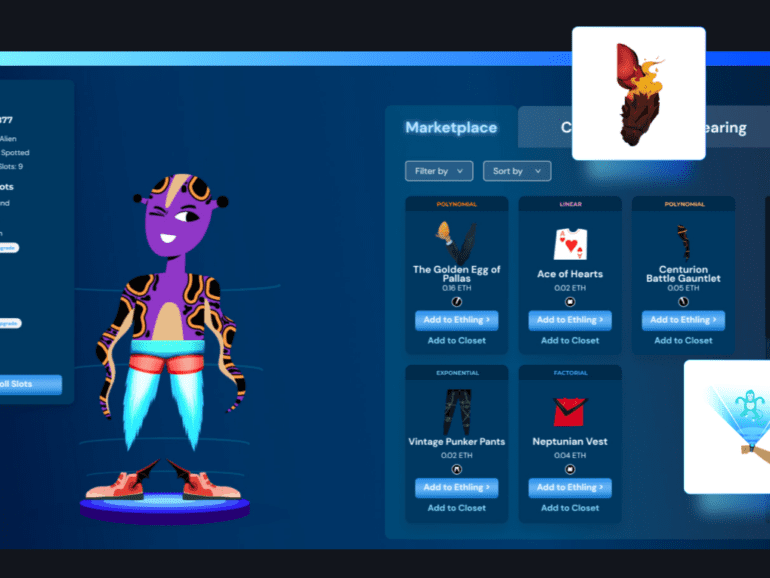

Luxury and fashion markets are structurally different from finance or commodity markets in that they seek to limit supply in order to generate value. Scarcity raises both the price and the social status attached to the goods. We can analogize these brand dynamics to what is happening in NFT digital object markets and better understand their function as a result.

We’re not cool. That’s why we’re in finance.

But people want to be cool. As highly social and intelligent animals, we want and need to belong, differentiate ourselves from each other, and negotiate for status. We build signals and hierarchies to create pockets of relational capital, which we then cash in for real-world benefits.

Such mammalian realities run contrary to the economic rendering of *homo economicus*, the abstracted rational agent making choices in financial models. In 2021, our financial models are waking up and instantiating themselves as Decentralized Autonomous Organizations (DAOs): spun up by DeFi and NFT industry insiders, and executing commercial actions onchain.

This write-up has been floating around in various mental states for a while, and done is better than perfect. So treat this as a core idea to be fleshed out later.

Payments and banking companies should be looking at how people purchase and store digital goods and digital currency in video games. That experience has been polished over 40 years, and it will be the default expectation for future generations.

For those interested, here is a website that collects user experiences of shopping across hundreds of designs.

OpenAI, backed with $1B+ by Elon Musk & MSFT, can now program SQL and write Harry Potter fan-fiction

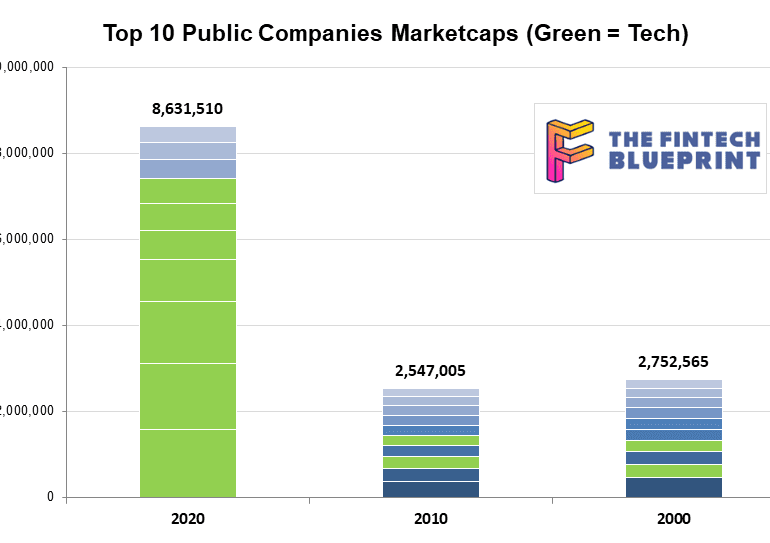

This week, we look at a breakthrough artificial intelligence release from OpenAI called GPT-3. It is powered by a machine learning architecture called a Transformer model, has 175 billion parameters, and was trained on text drawn from 8 years of web crawling. GPT-3 can do arithmetic, solve SAT analogy questions, write Harry Potter fan fiction, and write CSS and SQL code. We anchor the analysis of this development in the changing $8 trillion landscape of our public companies, and in the tech cold war with China.
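The Transformer's core operation is attention: each token's output is a weighted average of the other tokens' values, weighted by how well its query matches their keys. Below is a minimal pure-Python sketch of scaled dot-product attention, softmax(QK^T / sqrt(d)) V, with an optional causal mask of the kind GPT-style models use so that a token only looks at earlier tokens. It is illustrative only: a model like GPT-3 adds learned projections, many attention heads and layers, and runs at vastly larger scale, and the tiny vectors in the usage are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of vectors.

    Each output row is a weighted average of `values`, weighted by
    softmax(q . k / sqrt(d)). With causal=True, position i only attends
    to positions 0..i, as in GPT-style language models.
    """
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        visible = list(range(i + 1)) if causal else list(range(len(keys)))
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in visible]
        weights = softmax(scores)
        row = [
            sum(w * values[j][c] for w, j in zip(weights, visible))
            for c in range(len(values[0]))
        ]
        outputs.append(row)
    return outputs


# Three made-up 2-D token vectors used as queries, keys, and values at once (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens, causal=True):
    print([round(x, 3) for x in row])
```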

The evolution towards a financial metaverse is rapidly accelerating, with the growth in generative assets, profile picture avatars, the emerging derivative structures that build on their foundation, and DAOs that govern them. This article highlights the most novel developments, and builds the case for what a digital wallet / bank will need to be able to do in order to succeed on the way to this alien destination.

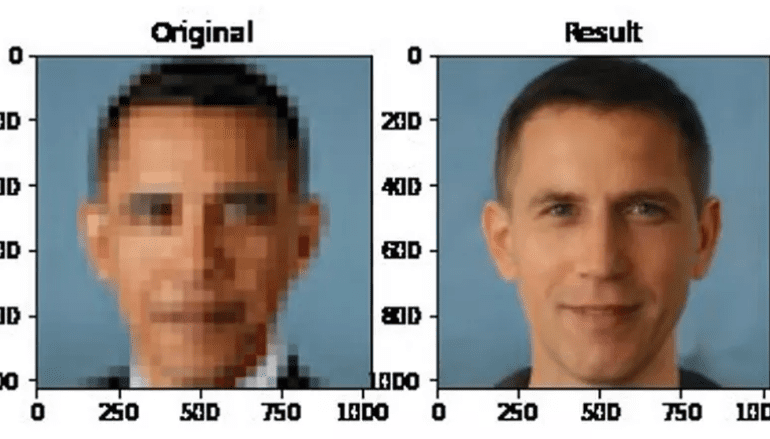

What we know intuitively, and what the software shows, is that the pixelated image can be expanded into a cone of multiple probable outcomes. The same pixelated face can yield millions of different, uncanny permutations. These mathematical permutations of our human flesh exist in what is called “latent space”. The way to pick one out of the many is an optimization method called “gradient descent”.
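A toy version of this search fits in a few lines. The sketch below assumes a hypothetical “generator” that maps a 2-D latent vector to a 4-pixel image, and a “downscale” that pixelates it to a single averaged pixel; neither resembles a real face GAN. Gradient descent adjusts the latent vector until the downscaled output matches the pixelated target, and different starting points land on different high-resolution images that all pixelate to the same value, which is the cone of outcomes in miniature.

```python
import random


def generate(z):
    """Hypothetical toy generator: 2-D latent vector -> 4-pixel 'image'."""
    z0, z1 = z
    return [z0, z1, z0 + z1, z0 - z1]


def downscale(image):
    """Pixelate the image down to a single pixel by averaging."""
    return sum(image) / len(image)


def search_latent(target, z, lr=0.1, steps=500):
    """Gradient descent on z to minimize (downscale(generate(z)) - target) ** 2."""
    z0, z1 = z
    for _ in range(steps):
        err = downscale(generate((z0, z1))) - target
        # downscale(generate(z)) == (3 * z0 + z1) / 4, so the gradient of the
        # squared error is 2 * err * (3/4) for z0 and 2 * err * (1/4) for z1.
        z0 -= lr * 2 * err * 3 / 4
        z1 -= lr * 2 * err * 1 / 4
    return z0, z1


random.seed(0)
for _ in range(3):
    start = (random.uniform(-2, 2), random.uniform(-2, 2))
    z = search_latent(0.5, start)
    print([round(p, 2) for p in generate(z)], "->", round(downscale(generate(z)), 3))
```

Each run prints a different 4-pixel image, yet every one of them downscales to the same target pixel.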

Imagine you are standing in an open field and see many beautiful hills nearby. Or, alternately, imagine you are standing on a hill, looking across the rolling valleys. You want to reach the bottom of one of these valleys. Gradient descent gets there by repeatedly stepping in whichever direction slopes downhill most steeply, so which valley you land in depends largely on where you start. The valley is the generated face. Which way would you go?
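The hill-and-valley picture maps directly onto a one-dimensional toy. The `landscape` function below is a made-up terrain with valleys at x = -1 and x = +1 and a hilltop at x = 0; plain gradient descent from different starting points settles into different valleys. A real face generator searches a space with many more dimensions, so treat this as an illustration of the mechanics only.

```python
def landscape(x):
    """Toy 1-D terrain: valleys at x = -1 and x = +1, a hilltop at x = 0."""
    return (x * x - 1) ** 2


def slope(x):
    """Derivative of landscape(x): 4x(x^2 - 1)."""
    return 4 * x * (x * x - 1)


def descend(start, lr=0.05, steps=1000):
    """Plain gradient descent: repeatedly take a small step against the slope."""
    x = start
    for _ in range(steps):
        x -= lr * slope(x)
    return x


for start in (-1.8, -0.3, 0.4, 1.7):
    print(f"start {start:+.1f} -> valley at {descend(start):+.3f}")
```

The two starts left of the hilltop end in the valley at -1, and the two on the right end at +1. Starting exactly on the hilltop (x = 0) never moves, because the slope there is zero.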

The image is taken from an AI paper that explains how to use generative adversarial networks (GANs) to hallucinate hyper-realistic imagery. After training on hundreds of thousands of samples, one network (the generator) is able to create candidates representing things like “just a normal dude holding a normal fish nothing to see here”, while a second network (the discriminator) learns to call out the ones that are too egregious, pushing the generator toward more convincing output.
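For reference, here is the textbook GAN objective from the original formulation by Goodfellow et al. (2014). The paper behind the image may use a different loss, so treat this as the standard version rather than its exact method. The discriminator $D$ outputs the probability that an image is real, and the generator $G$ turns random noise $z$ into an image:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

$D$ raises $V$ by scoring real images near 1 and generated ones near 0; $G$ lowers it by producing images that $D$ mistakes for real. That tug-of-war is the pressure that weeds out the egregious candidates.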

The reason the stuff above is so scary is that you can mathematically move through the space between images. For example, you could move between “a normal dude” and “just a normal fish” and get nightmare fish people. Or you could create a DNA root for an image that is part dog, part car, and part jellyfish. Check out the video below and the very accessible https://www.artbreeder.com/ website to see what I mean.
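Moving through the space between images is, in the simplest case, arithmetic on latent vectors: each image corresponds to a vector, and blending vectors before decoding them blends the images. The sketch below shows linear interpolation (`lerp`), spherical interpolation (`slerp`, a common alternative for latents drawn from a Gaussian), and a weighted `blend` of several latents. All vectors are made up and there is no generator here, so this illustrates the arithmetic only, not how Artbreeder actually works.

```python
import math


def lerp(z_a, z_b, t):
    """Linear interpolation: t=0 returns z_a, t=1 returns z_b."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


def slerp(z_a, z_b, t):
    """Spherical interpolation: walks along the arc between the two vectors."""
    dot = sum(a * b for a, b in zip(z_a, z_b))
    norm_a = math.sqrt(sum(a * a for a in z_a))
    norm_b = math.sqrt(sum(b * b for b in z_b))
    cos_omega = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    omega = math.acos(cos_omega)  # angle between the two vectors
    sin_omega = math.sin(omega)
    if sin_omega < 1e-8:  # (anti)parallel vectors: fall back to a straight line
        return lerp(z_a, z_b, t)
    w_a = math.sin((1 - t) * omega) / sin_omega
    w_b = math.sin(t * omega) / sin_omega
    return [w_a * a + w_b * b for a, b in zip(z_a, z_b)]


def blend(latents, weights):
    """Weighted average of several latents, e.g. part dog, part car, part jellyfish."""
    total = sum(weights)
    return [
        sum(w * z[i] for z, w in zip(latents, weights)) / total
        for i in range(len(latents[0]))
    ]


# Hypothetical 4-D latents; a real generator would decode each one into an image.
z_dude = [0.5, -1.2, 0.3, 0.8]
z_fish = [-0.7, 0.4, 1.1, -0.2]
path = [lerp(z_dude, z_fish, step / 4) for step in range(5)]  # 5 frames, dude to fish

z_dog, z_car, z_jelly = [1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]
hybrid = blend([z_dog, z_car, z_jelly], [0.5, 0.3, 0.2])
print(hybrid)
```

Decoding each frame of `path` in order would produce the morph from one image to the other; decoding `hybrid` would produce the mixed creature.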